Beyond 'Good Enough': Why Your AI Content Strategy Needs Continuous Fine-Tuning

AI Summary

The article explores why continuous fine-tuning is essential for AI content strategies to stay competitive and effective.

- Who: Business leaders and content strategists aiming to optimize AI-generated content

- What: The importance of ongoing AI model fine-tuning and feedback loops to improve content quality and performance

- When: Whenever content performance declines or market conditions shift, as flagged by data-driven triggers

- Where: In dynamic digital marketing environments and competitive industries leveraging AI for content

- Why: To maintain relevance, outperform competitors, increase engagement, and drive measurable business growth through adaptive AI models

You’ve seen what generative AI can do. It can produce blog posts, product descriptions, and ad copy in minutes, and the initial results are often impressive. But a critical question soon follows for any serious business leader: how do we maintain this quality and, more importantly, how do we improve it to consistently outperform competitors?

Many businesses fall into the static AI trap. They find a few good prompts, generate a wave of content, and consider the job done. This approach treats AI as a simple tool, not a strategic asset. The problem is that markets shift, customer needs evolve, and search engine algorithms are constantly updated. Content that was relevant six months ago may underperform today, and a model that isn't learning is a depreciating asset.

The Static AI Trap: When Initial Results Become Future Roadblocks

Relying on a fixed set of prompts or a generic, out-of-the-box AI model is like navigating with an old map. It might get you started in the right direction, but you'll eventually hit unforeseen roadblocks. For the 79% of corporate strategists who see AI as critical for competitive differentiation, a static approach simply isn't an option. Without a system for continuous improvement, your content engine will lose its edge, failing to deliver on the key benefits of AI, such as creating new revenue streams and personalizing customer experiences.

This is because the digital ecosystem is anything but static. Your content must adapt to:

- Evolving Search Intent: The questions users ask and the answers they seek change over time.

- Algorithm Updates: Search engines refine their ranking factors to reward higher-quality, more relevant content.

- Competitive Pressure: Your rivals are also using AI, and the bar for excellence is constantly rising.

A "set it and forget it" strategy ignores these realities, leading to diminishing returns and a growing gap between your content and your audience's needs.

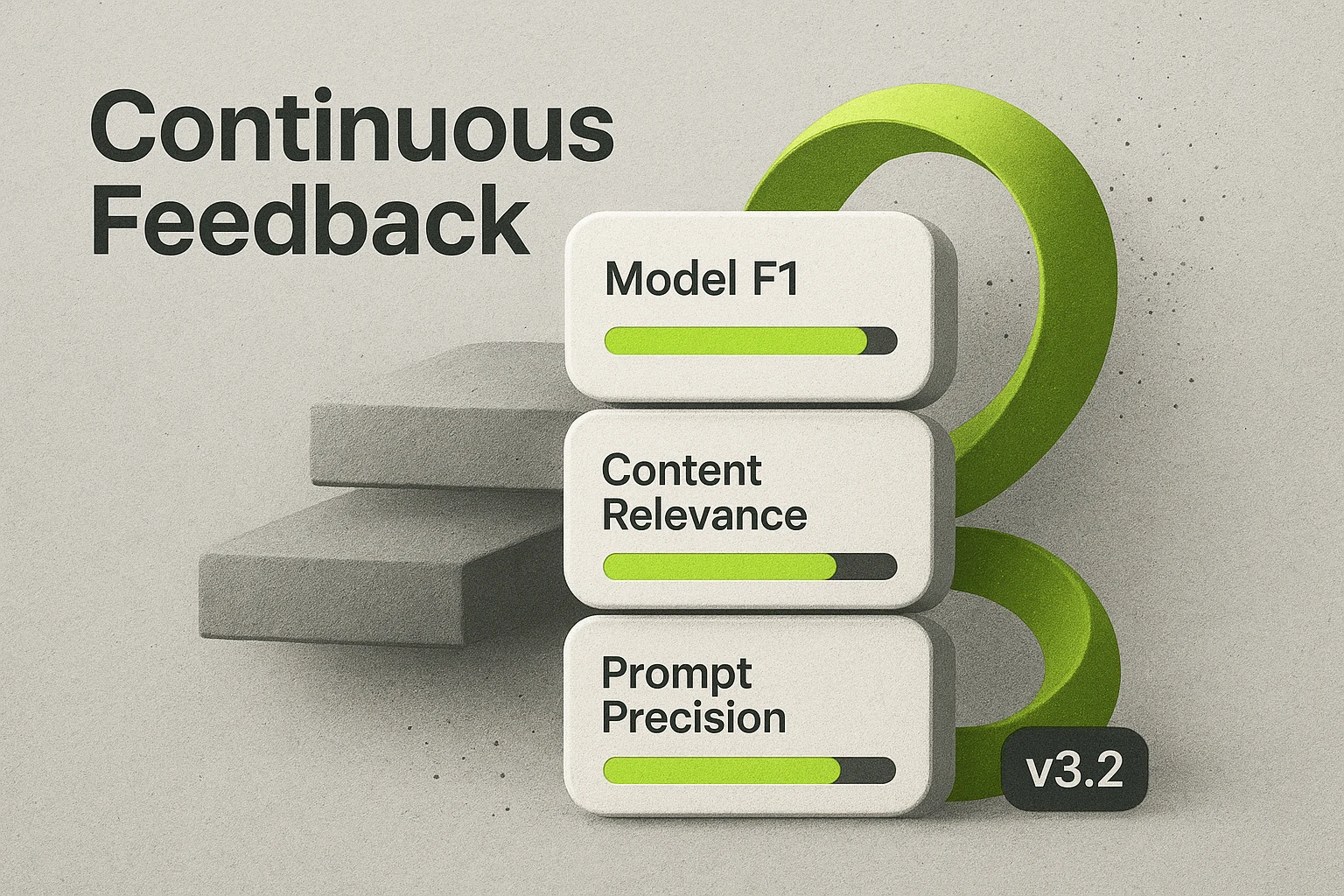

Building an Intelligent Content Engine: The Power of the Feedback Loop

The most successful AI content strategies are not static. They are dynamic, self-optimizing systems built on a continuous feedback loop. This iterative process transforms a simple content generation tool into an intelligent engine that learns from its own performance.

The process is straightforward yet powerful:

- Deploy: Generate and publish content using the most refined models and prompts currently available.

- Measure: Track key performance indicators like organic traffic, user engagement, bounce rates, and conversion events.

- Analyze: Use this real-world data to identify high-performing content and uncover areas of weakness or irrelevance.

- Refine: Feed these insights back into the system to fine-tune the AI model and optimize prompts for better results.

This cycle ensures your content strategy doesn't just keep pace with the market. It anticipates and adapts, becoming a reliable engine for growth.
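To make the Analyze step concrete, here is a minimal sketch in Python. It assumes you already export per-article metrics from your analytics tool. The `ContentMetrics` fields, the `analyze` function, and the median-based cutoff are all illustrative assumptions, not a prescribed method.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class ContentMetrics:
    """Hypothetical per-article metrics collected in the Measure step."""
    url: str
    organic_sessions: int
    bounce_rate: float  # fraction of sessions, 0.0 to 1.0 (not used by this simple rule)
    conversions: int


def conversion_rate(m: ContentMetrics) -> float:
    """Conversions per session; 0.0 when there is no traffic."""
    return m.conversions / m.organic_sessions if m.organic_sessions else 0.0


def analyze(metrics: list[ContentMetrics]) -> dict[str, list[str]]:
    """Split articles into 'reinforce' and 'revise' groups.

    Assumption: an article counts as high-performing when its conversion
    rate is at or above the median of the batch. Everything else is
    flagged for revision in the Refine step.
    """
    groups: dict[str, list[str]] = {"reinforce": [], "revise": []}
    if not metrics:
        return groups
    cutoff = median(conversion_rate(m) for m in metrics)
    for m in metrics:
        key = "reinforce" if conversion_rate(m) >= cutoff else "revise"
        groups[key].append(m.url)
    return groups


# Made-up example data, for illustration only.
batch = [
    ContentMetrics("/blog/a", 1000, 0.40, 50),  # 5% conversion rate
    ContentMetrics("/blog/b", 800, 0.65, 8),    # 1% conversion rate
    ContentMetrics("/blog/c", 1200, 0.55, 36),  # 3% conversion rate
]
result = analyze(batch)
```

In practice you would likely blend several indicators rather than rely on conversion rate alone, but the shape stays the same: measured data goes in, and a list of what to reinforce and what to revise comes out.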

Fine-Tuning vs. Prompt Engineering: A Critical Distinction for Decision-Makers

As you evaluate different AI solutions, it is vital to understand the difference between prompt engineering and model fine-tuning.

Prompt engineering involves crafting better instructions for a general-purpose AI model. It’s a crucial skill, but it has limits. You are still working with the model's existing knowledge base.

Model fine-tuning, on the other hand, is the process of further training a pretrained AI model on a specific, curated dataset. This dataset can include your best-performing content, brand guidelines, and proprietary data. According to research from Stanford University's Institute for Human-Centered AI, a smaller model trained on high-quality, curated data can significantly outperform a larger, more generic model.

Fine-tuning doesn't just refine the output. It fundamentally realigns the model with your unique business context, voice, and objectives. This creates a deeply customized asset that is far more powerful and defensible than a library of clever prompts.
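As a rough illustration of what a curated dataset can look like, the sketch below filters a content library down to proven performers and writes them out as JSON Lines training records. The field names (`brief`, `body`, `content_type`, `conversion_rate`), the 3% threshold, and the prompt/completion record shape are assumptions made for this example. Fine-tuning providers each define their own schema, so check the documentation for the one you use.

```python
import json


def build_finetuning_records(articles, min_conversion_rate=0.03):
    """Turn top-performing articles into prompt/completion training records.

    The threshold and the record shape are illustrative assumptions.
    """
    records = []
    for article in articles:
        if article["conversion_rate"] < min_conversion_rate:
            continue  # keep only content that has proven itself
        records.append({
            "prompt": f"Write a {article['content_type']} about: {article['brief']}",
            "completion": article["body"],
        })
    return records


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


# Made-up example library, for illustration only.
library = [
    {"content_type": "blog post", "brief": "choosing a CRM",
     "body": "Full text of a high-performing article.", "conversion_rate": 0.05},
    {"content_type": "product description", "brief": "starter plan",
     "body": "Full text of a weak article.", "conversion_rate": 0.01},
]
records = build_finetuning_records(library)
jsonl = to_jsonl(records)
```

The filter is the important part. A small set of examples that reflect your voice and have earned results is usually a better starting point than a large, unfiltered export.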

The Measurable Impact of Systematic Retraining

The theoretical benefits of fine-tuning are compelling, but the practical results are what truly matter. A study from MIT Technology Review found that organizations that systematically retrain their models see a 50% improvement in model performance over time compared to those that do not.

This isn't just a technical metric. It translates directly into business value. McKinsey estimates that generative AI could add up to $4.4 trillion in annual economic value, with a significant portion coming from marketing and sales applications. Continuous improvement is how you capture your share of that value.

Improved performance means content that ranks higher, engages more deeply, and converts more effectively. It’s the difference between content that simply exists and content that drives revenue.

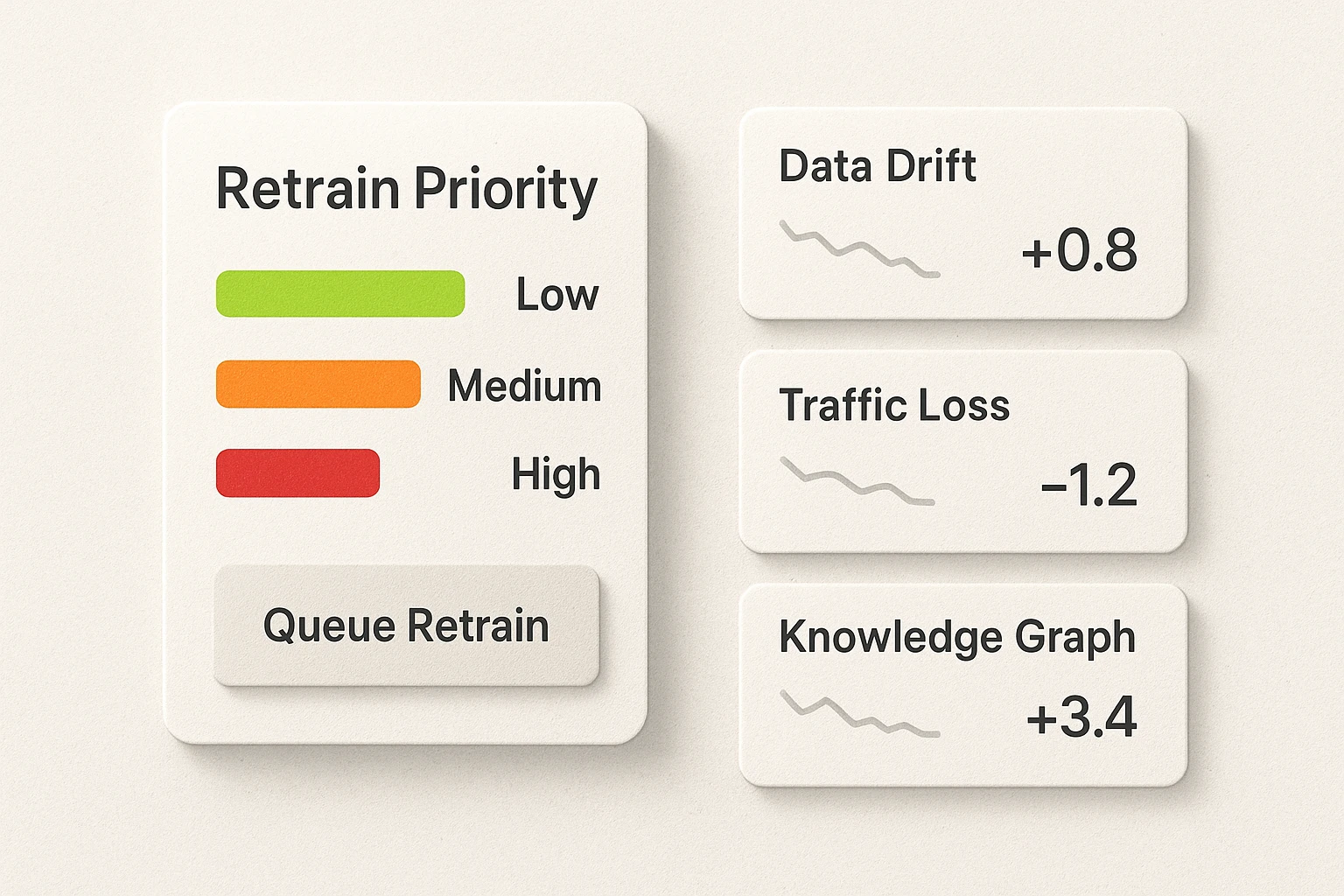

How We Prioritize and Execute Model Optimization

Effective fine-tuning isn't random. It’s a deliberate, data-driven process. Instead of guessing what needs to be improved, we use performance signals to identify and prioritize optimization opportunities. This ensures that every retraining cycle delivers the maximum possible impact on business goals.

We monitor a range of signals that indicate when a model or content strategy needs adjustment:

- SERP Volatility: Sudden changes in search rankings for your target keywords.

- Performance Decay: A gradual decline in traffic or engagement for a specific content cluster.

- New Business Goals: A company pivot, new product launch, or entry into a new market.

- Emerging Keywords: New search terms that signal a shift in customer intent.

These signals are translated into clear, actionable priorities. This allows our team to focus on the highest-impact activities, whether that means retraining a model to capture a new topic cluster or refining prompts to better align with a new brand voice. An expert AI SEO Strategist manages this entire lifecycle, ensuring your content engine is always operating at peak performance.
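One simple way to turn signals into priorities is a weighted score per topic cluster. The sketch below is only an illustration: the weights in `SIGNAL_WEIGHTS`, the `Signal` fields, and the additive scoring rule are hypothetical choices, not a description of any specific production system.

```python
from dataclasses import dataclass

# Hypothetical weights expressing how urgent each signal type is.
SIGNAL_WEIGHTS = {
    "serp_volatility": 3.0,
    "performance_decay": 2.0,
    "new_business_goal": 4.0,
    "emerging_keyword": 1.5,
}


@dataclass
class Signal:
    kind: str           # one of the keys in SIGNAL_WEIGHTS
    topic_cluster: str  # the content cluster the signal applies to
    magnitude: float    # normalized strength, 0.0 to 1.0


def prioritize(signals):
    """Rank topic clusters by the summed, weighted strength of their signals."""
    scores = {}
    for s in signals:
        weighted = SIGNAL_WEIGHTS[s.kind] * s.magnitude
        scores[s.topic_cluster] = scores.get(s.topic_cluster, 0.0) + weighted
    # Highest score first: these clusters get optimization attention first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Made-up example signals, for illustration only.
signals = [
    Signal("serp_volatility", "pricing", 0.5),
    Signal("performance_decay", "pricing", 0.5),
    Signal("new_business_goal", "onboarding", 0.5),
    Signal("emerging_keyword", "integrations", 1.0),
]
ranked = prioritize(signals)
```

Here the "pricing" cluster ranks first because two moderate signals stack up. The weights are where business judgment enters, so they should be reviewed whenever goals change.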

Adapting to a Dynamic Search Landscape

Ultimately, the goal of a sophisticated content strategy is to build a durable connection with your audience. Salesforce research shows that 73% of marketing leaders believe generative AI will help them better personalize customer experiences. Continuous model improvement is the mechanism that makes this possible at scale.

A self-optimizing content engine doesn't just react to change. It learns from every interaction, steadily improving its ability to deliver the right message to the right person at the right time. This adaptability is crucial for long-term success. It ensures your content remains a valuable asset that builds authority and drives growth. The ultimate objective is to connect every piece of content back to tangible business outcomes, answering the critical questions that drive strategy, such as: can we estimate customer lifetime value (LTV) from prompt-driven lead sources? This level of insight is only possible with a dynamic, learning system.

Frequently Asked Questions

How often should AI models be retrained for content?

It’s not about a fixed schedule. The best approach is data-driven, based on performance triggers. Retraining should occur when you detect a decline in content effectiveness, when business goals change significantly, or when there are major shifts in the search landscape. A continuous monitoring system is more effective than a rigid calendar.
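A performance trigger can be as simple as comparing recent traffic with an earlier baseline. The following sketch assumes weekly organic session counts for a content cluster. The function name `should_retrain`, the window sizes, and the 20% drop threshold are illustrative assumptions that you would tune to your own data.

```python
from statistics import mean


def should_retrain(weekly_sessions, baseline_weeks=8, recent_weeks=4, max_drop=0.20):
    """Return True when recent traffic has fallen too far below the baseline.

    weekly_sessions is ordered oldest to newest. The last `recent_weeks`
    values are compared with the `baseline_weeks` values just before them.
    """
    if len(weekly_sessions) < baseline_weeks + recent_weeks:
        return False  # not enough history to judge
    recent = weekly_sessions[-recent_weeks:]
    baseline = weekly_sessions[-(baseline_weeks + recent_weeks):-recent_weeks]
    baseline_avg = mean(baseline)
    if baseline_avg == 0:
        return False  # no baseline traffic, so a relative drop is undefined
    drop = (baseline_avg - mean(recent)) / baseline_avg
    return drop >= max_drop


# Made-up example series, for illustration only.
stable = [1000] * 8 + [980, 1010, 990, 1000]   # recent average is about 0.5% lower
decaying = [1000] * 8 + [800, 760, 740, 700]   # recent average is 25% lower

stable_trigger = should_retrain(stable)
decaying_trigger = should_retrain(decaying)
```

With these example numbers, only the decaying series crosses the threshold. A real monitoring system would also account for seasonality and tracking changes before treating a drop as a retraining signal.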

Is fine-tuning expensive and time-consuming?

It is an investment, but the alternative is far more costly. Publishing irrelevant or underperforming content wastes resources and damages your brand's authority. Our approach streamlines the process, making it an efficient investment in a compounding asset that delivers increasing returns over time.

What kind of data is needed for effective fine-tuning?

The best results come from a diverse dataset. This includes quantitative performance data like traffic and conversions, qualitative inputs like customer feedback, and a library of your best human-written content that exemplifies your brand voice and expertise. The quality of this data is more important than the quantity.

Your Next Step Toward a Self-Optimizing Content Engine

The initial promise of AI content generation has been fulfilled. Now, the competitive advantage will go to those who move beyond simple generation and build intelligent, self-optimizing systems. A static content strategy is no longer viable in a dynamic digital world.

If you are ready to build a content engine that learns, adapts, and consistently delivers business results, it's time to explore a more strategic approach. Schedule a consultation to discuss how we can help you implement a continuous improvement framework for your AI content strategy.